No sooner had students rejoiced that they now had AI generation tools to cope with all those bothersome essays than AI detectors came onto the scene. Like the plagiarism detection tools before them, they promise to end tech-powered cheating and promote fairness in academia.

Yet are they actually any good? How do they work? Can they really tell the work of ChatGPT and the like from your honest output full of sweat, tears, and caffeine? Will they save education from reverting to pen-and-paper classroom assessments and discussions as the only reliable grading techniques?

What Is an AI Content Detector?

AI detector tools have been evolving along with generative AI tools for some time now. Automatic text detection has been applied, for example, to spam filtering, social media content moderation, and keyword detection, which identifies a text as relevant to a specific topic and thus worthy of showing up in search results. However, with the growing text-generating capabilities of AI tools, the need has arisen to detect whether a specific text was created by a human or automatically fabricated. Why is establishing the line between the two essential?

The matter is much more complex than teachers finding out which students did their assignments themselves and which ones cheated by asking ChatGPT, “Write my paper for literature!” This side of things has gotten the lion’s share of attention lately, but AI text generation has far more important implications than academic dishonesty.

Social media platforms are concerned with bots and fake accounts. Businesses are wary of fake reviews and other fraudulent activities. Government and law enforcement agencies want to be able to fight disinformation, propaganda, conspiracy theories, and criminal activities such as impersonation scams and cyberbullying. Finally, researchers want to be able to assess the credibility of information and the reliability of the source.

Can automated detectors help with uncovering AI-generated text, or should it all fall on the shoulders of humans and their intuition? Thankfully, AI detectors can be of help.

How Do AI Detectors Work?

At first, it might seem that the text generated by the viral ChatGPT or other generative AI language models such as Jasper or Rytr is absolutely human-like and indistinguishable from content produced traditionally by a professional writer. However, believe it or not, AI tools don’t really understand the meaning of words. They are trained models that operate on numbers, trying to balance the predictability of grammar and lexical structures with “creativity” in combining various elements. They base their predictions on the texts they have been trained on. If they err on the side of predictability and stick with what they’ve been fed, they sound boring and repetitive. If they err on the side of “creativity,” they might create outlandish-sounding sentences with no meaning. If they hit the sweet spot, they produce human-sounding original content that rings true to the reader. However, this content will still be built on algorithmic predictions and will therefore be predictable to other AI language models “reading” the text.

That’s the logic behind AI detectors. They reverse-engineer the process based on known AI-generated texts and measure patterns and predictability via linguistic analysis.

During linguistic analysis, the detector looks at how often specific words and word sequences occur in a text, as well as at grammar, sentence structure, and semantics, searching for patterns. When comparing the text against known AI-generated texts, detectors use two main metrics: perplexity and burstiness. Perplexity measures how well a language model can predict a sample. If perplexity is low, the model isn’t very “surprised” by the word choice, so the text is likely to be generated. If perplexity is high, the model is “confused” by the text, which is likely to be a product of human creativity. Burstiness measures how much a text varies in its wording and sentence structure. Human writing tends to come in bursts, mixing long and short sentences and common and unusual phrases, while generated text keeps returning to the words and phrases seen often in the data the AI was trained on. Lack of variation and repetitiveness are indicators of AI-generated text.

By combining linguistic analysis with measuring perplexity and burstiness, AI detectors make an educated guess about how likely the text is human-written.
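To make the two metrics more tangible, here is a minimal Python sketch. It rests on several assumptions that are mine, not the detectors': a toy word-frequency model with add-one smoothing stands in for the large language models real tools rely on, the `REFERENCE` text is a made-up stand-in for training data, and burstiness is taken to be the spread of perplexity from sentence to sentence, which is one common reading of the term. Treat it as an illustration of the idea rather than how any particular detector works.

```python
import math
import re
from collections import Counter
from statistics import pstdev

# Tiny made-up stand-in for the text a real language model is trained on.
REFERENCE = "the cat sat on the mat. the dog sat on the rug."


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def train_unigram(corpus):
    """Count word frequencies; returns (counts, total number of tokens)."""
    counts = Counter(tokenize(corpus))
    return counts, sum(counts.values())


def perplexity(text, counts, total):
    """exp of the average negative log-probability per token (add-one smoothing)."""
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no word tokens")
    vocab = len(counts) + 1  # one extra slot for any unseen word
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


def burstiness(text, counts, total):
    """Spread (population standard deviation) of per-sentence perplexity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if tokenize(s)]
    scores = [perplexity(s, counts, total) for s in sentences]
    return pstdev(scores) if len(scores) > 1 else 0.0


counts, total = train_unigram(REFERENCE)
# Familiar wording scores lower (less "surprising") than unfamiliar wording.
print(perplexity("the cat sat on the mat", counts, total))
print(perplexity("quantum marmalade eclipses bureaucracy", counts, total))
# A text that mixes familiar and unfamiliar sentences has non-zero burstiness.
print(burstiness("the cat sat. quantum marmalade eclipses.", counts, total))
```

In this toy setup, a low perplexity combined with a low burstiness would be the "robotic" signature, while a high and uneven score would point toward a human author.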

Are They Effective?

Whether it’s good or bad news for you, there is no foolproof way to detect if a text is AI-generated or not. All we can speak of here is probability.

Moreover, different detection tools give varying results that are sometimes off by 20 to 60 percent. Some of them greenlight AI-generated texts as human-written, while others tend to be more suspicious and flag human content as “robotic.” However, by running a text through several detectors and comparing the results, you can more or less accurately assess the likelihood of the text being natural or artificial.
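If you want to compare several detectors systematically, the idea can be sketched in a few lines of Python. Everything here is an assumption for illustration: the function name `combine_scores`, the 0.25 disagreement threshold, and the sample scores are hypothetical, and each detector's output is assumed to have been converted by hand into a probability between 0 and 1 that the text is AI-generated.

```python
from statistics import mean, pstdev


def combine_scores(ai_probabilities, disagreement_threshold=0.25):
    """Pool per-detector 'probability of AI' scores into one rough verdict.

    ai_probabilities maps a detector name to a value between 0 and 1.
    If the detectors disagree too much, the verdict is 'inconclusive'.
    """
    if not ai_probabilities:
        raise ValueError("at least one detector score is required")
    values = list(ai_probabilities.values())
    average = mean(values)
    spread = pstdev(values)  # how strongly the detectors disagree
    if spread > disagreement_threshold:
        verdict = "inconclusive"
    elif average >= 0.5:
        verdict = "likely AI-generated"
    else:
        verdict = "likely human-written"
    return {"mean": average, "spread": spread, "verdict": verdict}


# Hypothetical scores, not results from any real tool.
sample = {"detector_a": 0.90, "detector_b": 0.80, "detector_c": 0.95}
print(combine_scores(sample))
```

The spread matters as much as the average: when one tool says 5% and another says 95%, the honest answer is "inconclusive," not "50% AI."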

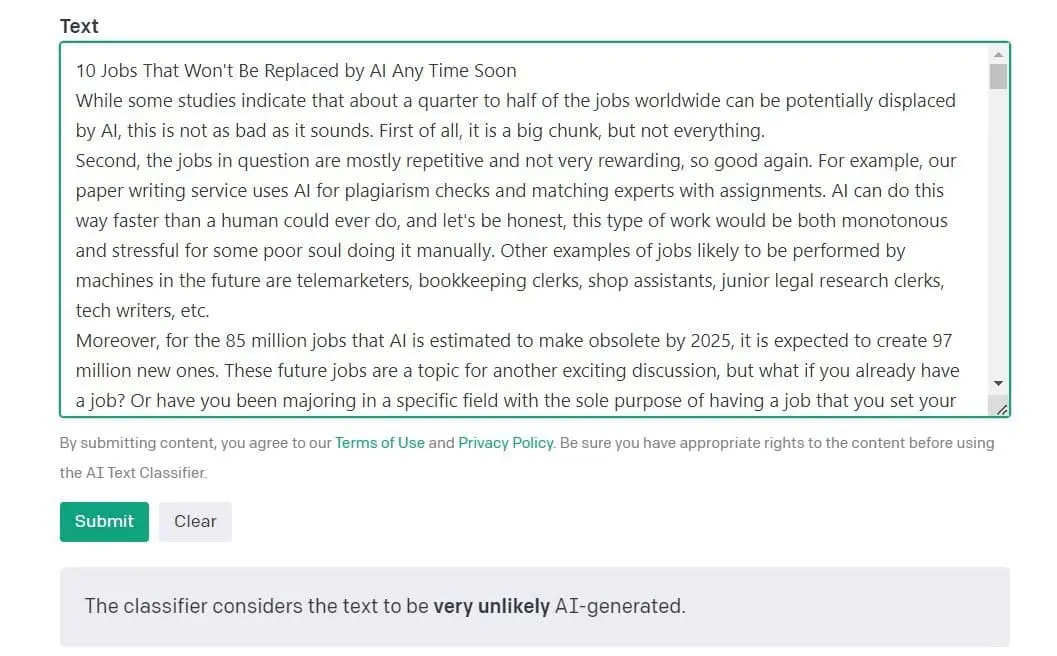

For this article, I conducted an experiment using two pieces of text: the final draft of an earlier blog about jobs that won’t be replaced by AI and a text on the same topic that I asked ChatGPT to create for me. While some detectors misattributed AI’s creation as human or mixed, and some were suspicious about particular paragraphs of my authorship, pooling results from different detectors is very likely to give you the full picture.

Based on the analysis of fragments misattributed to AI in human text, I would say an average AI content detector tends to see short sentences and an objective, dispassionate tone as AI features. It also might mislabel friendly human-sounding phrases that can be easily categorized as “attention grabbers,” such as short rhetorical questions (e.g., “Should you worry?”) or calls to action (e.g., “Let’s find out!”). Such phrases occur naturally in blogs and entertainment articles but might be overused in marketing copy. The first widely available AI tools, such as Jasper, usually targeted the marketing niche, so it’s no surprise AI detection tools were trained on significant volumes of marketing content. They might misinterpret an upbeat tone, short sentences, and engaging little hooks as generated content because these features oversaturated the generated texts the detectors were fed.

The line between AI-generated and human-written text is likely to blur as more advanced models become available. It’s already happening with GPT-4. According to Irene Solaiman, policy director at AI start-up Hugging Face, there is no magical solution to AI detection. “These detection models are playing catch-up, and they’re not going to be as good [as AI text generators],” she said in an interview with Euronews. The same source cited Muhammad Abdul-Mageed, an assistant professor at the University of British Columbia, who likened developing an effective detection tool to chasing a moving target.

10 Free AI Detector Tools and Their Accuracy

Still, despite all the caveats, what are your options if you want to see whether a text you encounter is the real deal or a fabrication? There is a plethora of tools, paid and free. Here is a quick overview of 10 free AI detectors. Some put a cap on the character count you can check in one go, and some give unlimited access to individuals.

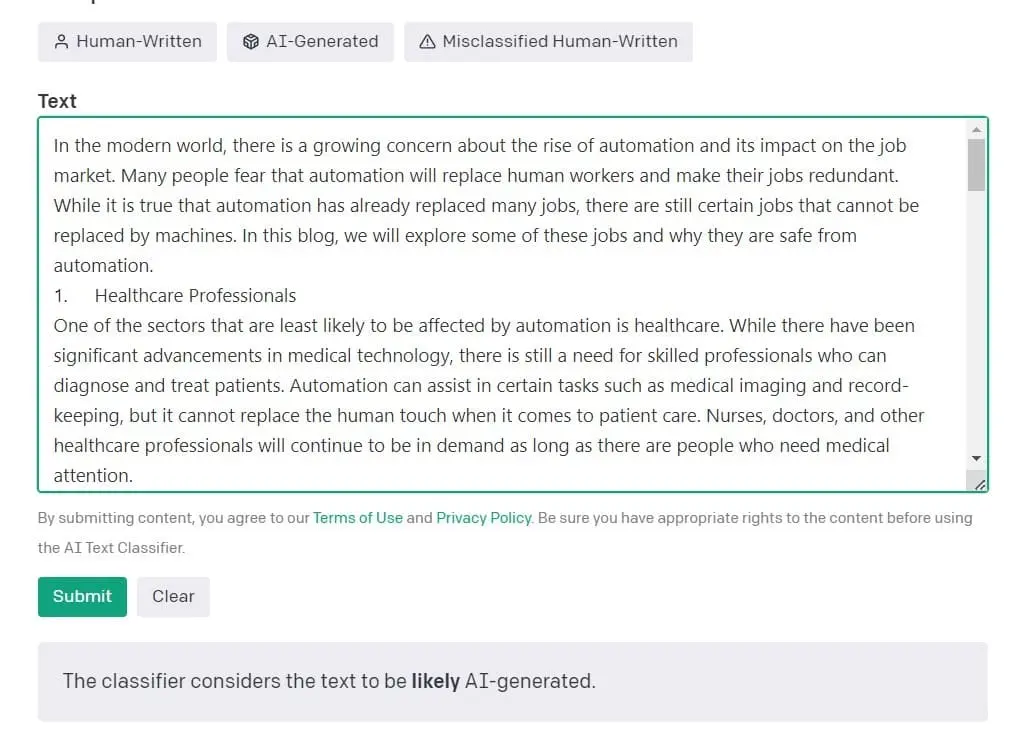

Result for human text:

Result for ChatGPT-generated text:

The Text Classifier from OpenAI gives you an assessment in terms of likelihood. For example, “The text is likely to be AI-generated” or “The text is very unlikely to be AI-generated.”

Unfortunately, it is rather inept when it comes to detecting content generated by its sister program, ChatGPT. Although it succeeded this time, it misfired more than once earlier with other samples, labeling them as “unclear” or only “likely to have AI-generated fragments.” That makes sense, since both are based on the same language model developed by OpenAI. ChatGPT imitates human-sounding text to the best of its ability, and Text Classifier doesn’t know any better because they are cut from the same cloth, so to speak. So ChatGPT’s efforts are as human as it gets in OpenAI’s book.

However, this is likely to change in the near future. Experts working for OpenAI have claimed that a new watermarking system is underway that will stamp invisible signals onto generated texts to make detecting them easier. This could help resolve some of the potential ethical problems.

It hasn’t been officially confirmed yet, but it aligns with OpenAI being worried about the effects its tools are producing. According to Mira Murati, chief technology officer at OpenAI, the company wanted to disrupt education with personalized learning, disability assistance, adaptive testing, and bias-free grading. I am sure that witnessing how public schools and entire countries ban ChatGPT due to privacy concerns and the influx of generated essays isn’t making them happy.

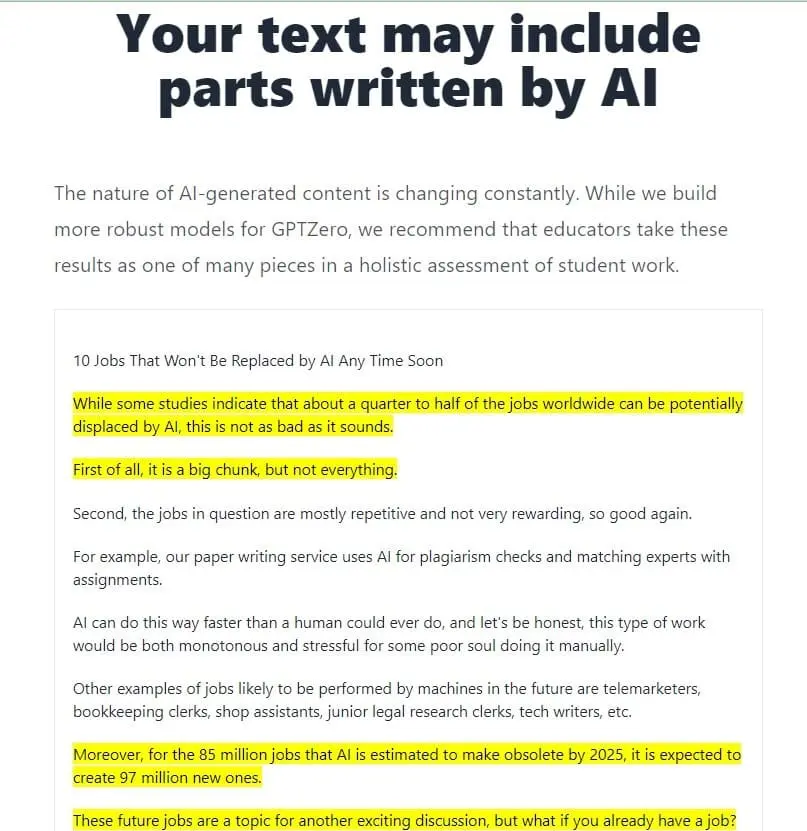

Results for human text:

Results for AI-generated text:

GPTZero was developed by a Princeton student specifically for teachers to inspect papers for AI-generated content. It is very efficient in detecting robotic text. However, it tends to err on the side of caution and often suspects some human-written passages of being AI-created.

It gives its estimate in terms of likelihood but also provides stats for perplexity and burstiness, so you can judge for yourself. It is free and unlimited and doesn’t require registration. To check your text, you can either copy and paste it into the text window or upload a file from your device.

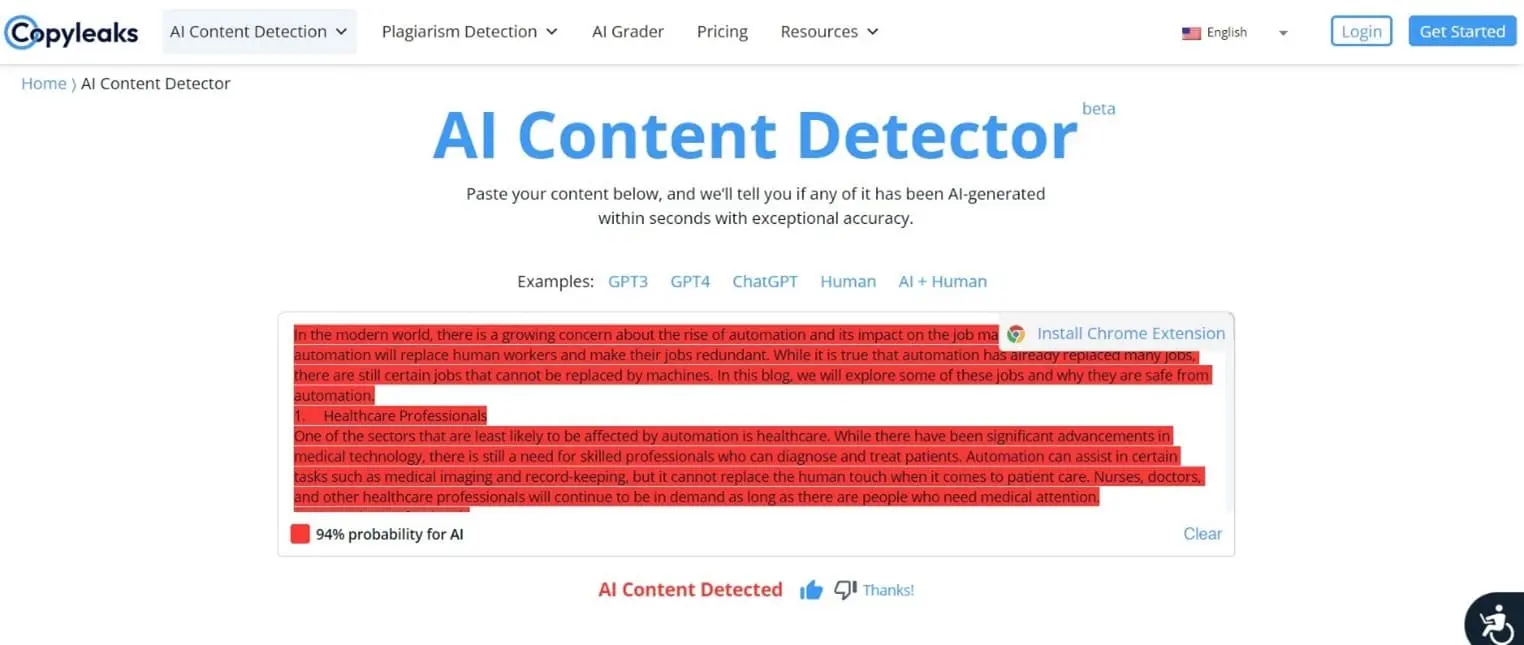

AI Content Detector at Copyleaks

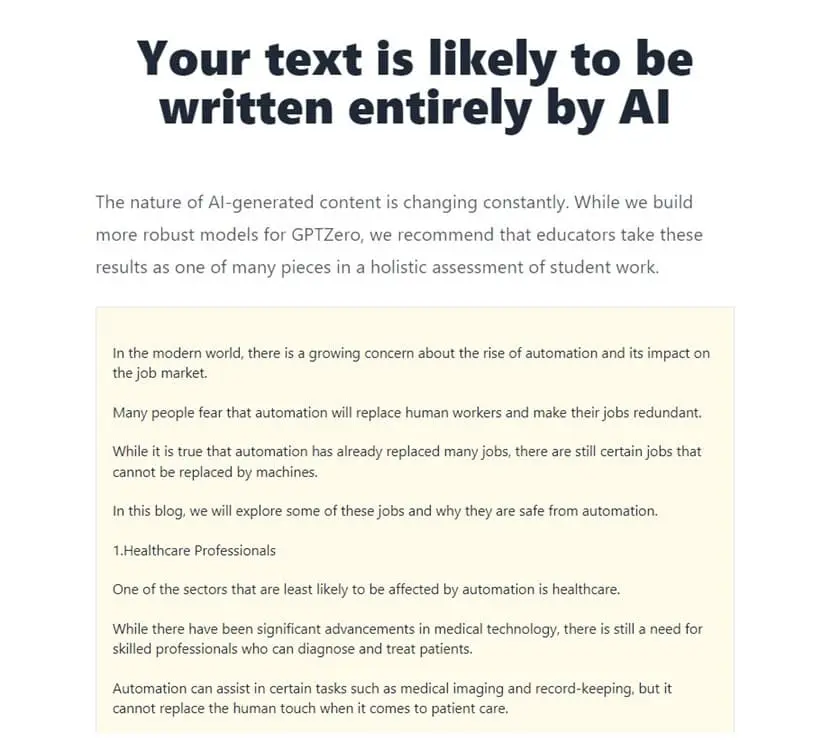

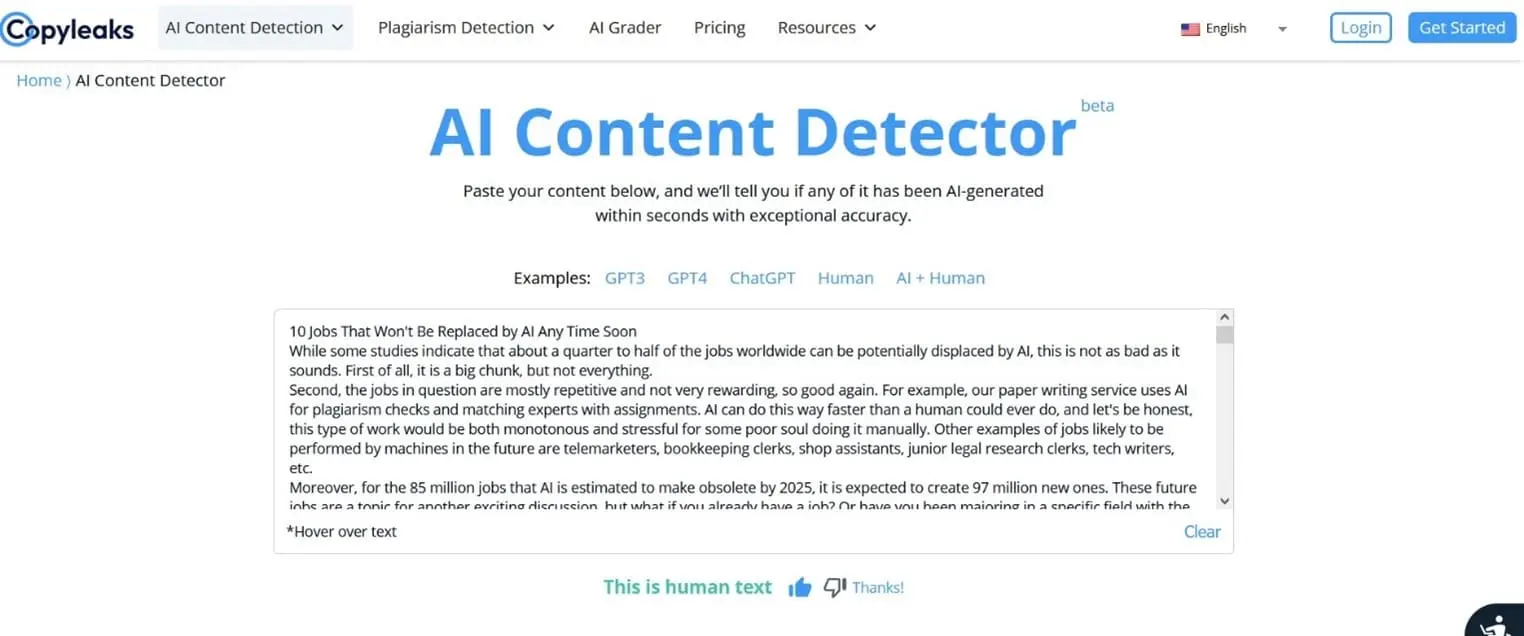

Results for human text:

Results for AI-generated text:

The AI Content Detector at Copyleaks is currently in beta and free to use, and it shows impressive results with 99.12% accuracy. Not only can it clearly tell human content from non-human; it also gives you a percentage probability for paragraphs and phrases if you hover over them. So far, there are no character limits on free checks. To check a piece of text, you can either copy and paste it into the window or upload a file from your device.

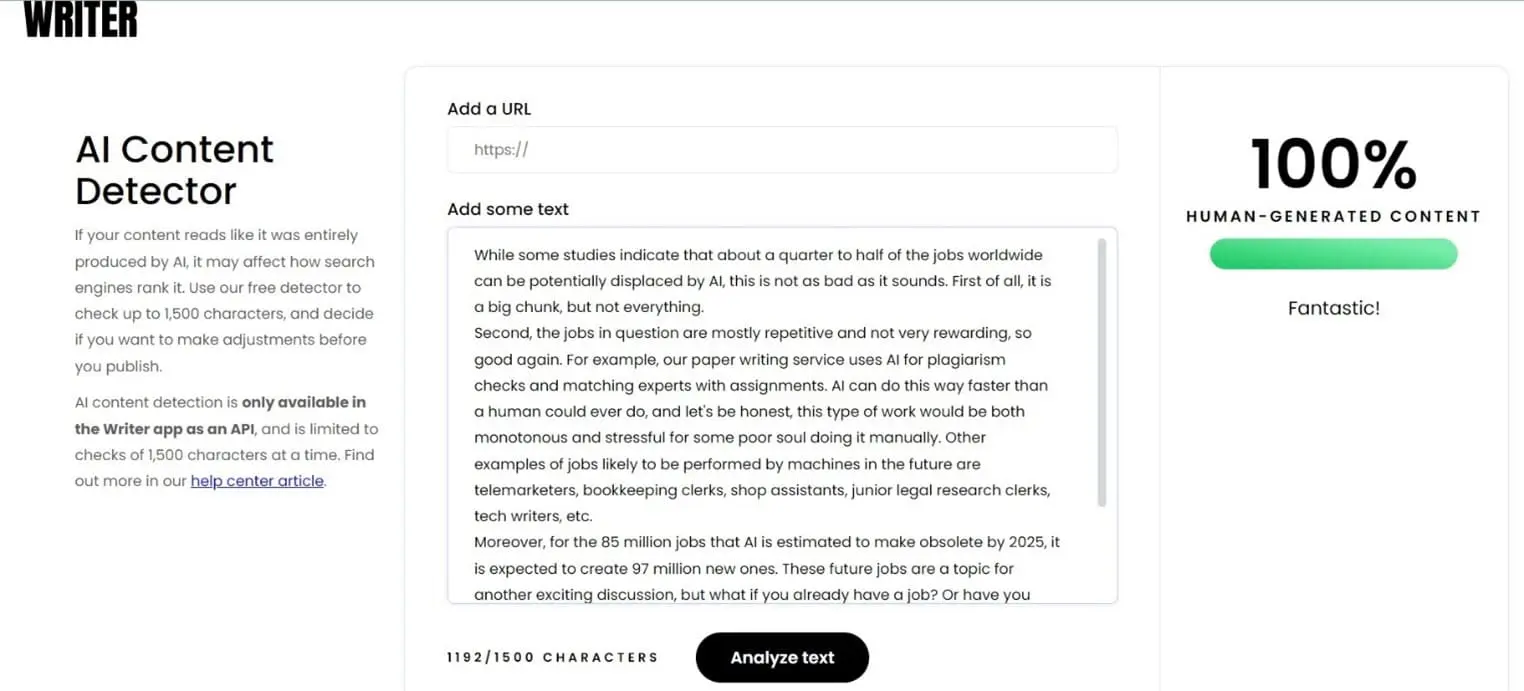

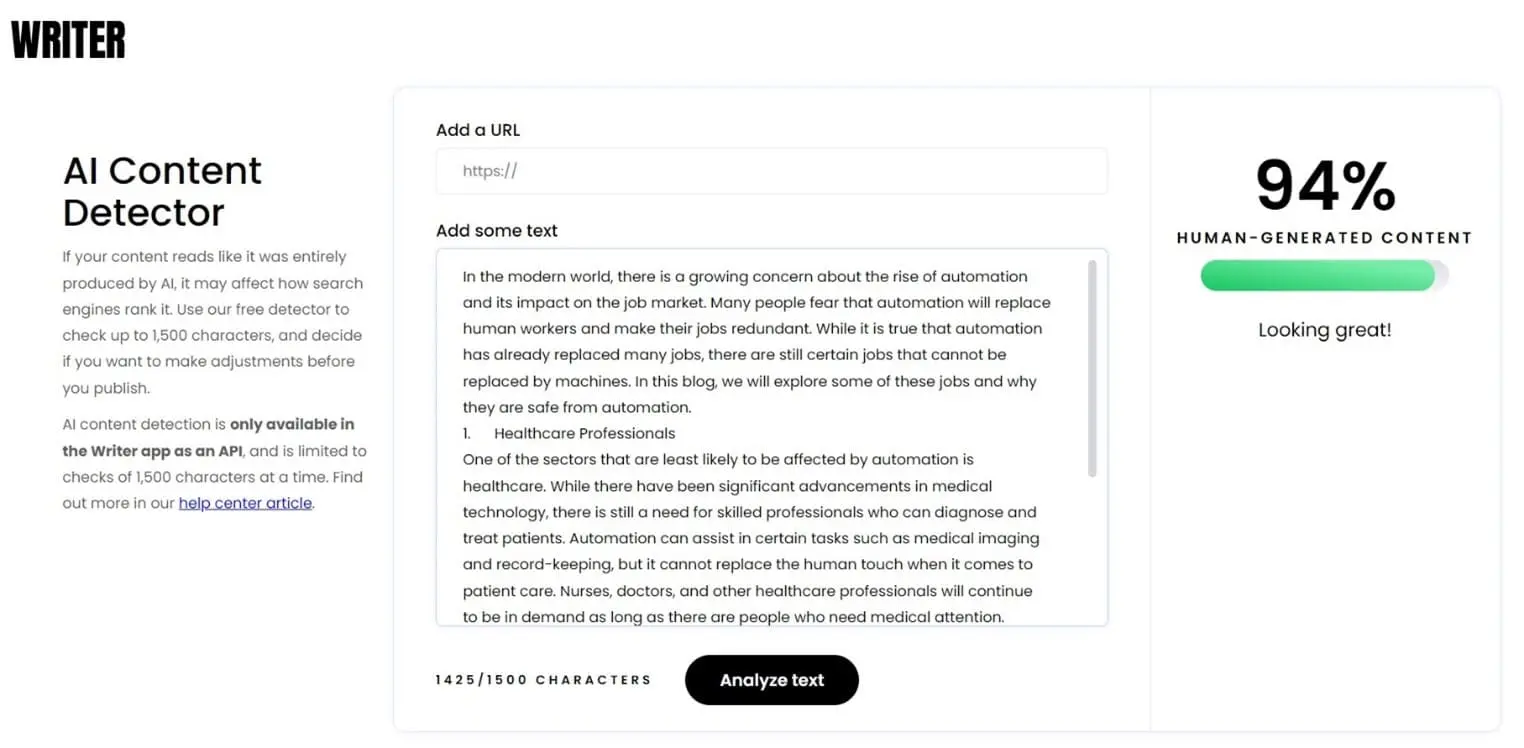

Results for human text:

Results for AI-generated text:

From what I’ve seen so far, the AI content detector at Writer.com is a bit loosey-goosey. It might show better results with text created by less sophisticated tools, but ChatGPT fools it successfully. While it detected my blog as 100% human, it also awarded a whopping 94% to a fully AI-generated fragment. Still, it might improve its methods in the future, so you might want to keep an eye on it.

To check a piece of text, you can copy and paste it into the window or submit a URL containing the text you want to analyze. You can examine up to 1,500 characters at a time for free. For bigger chunks, you will need to buy a Team plan.

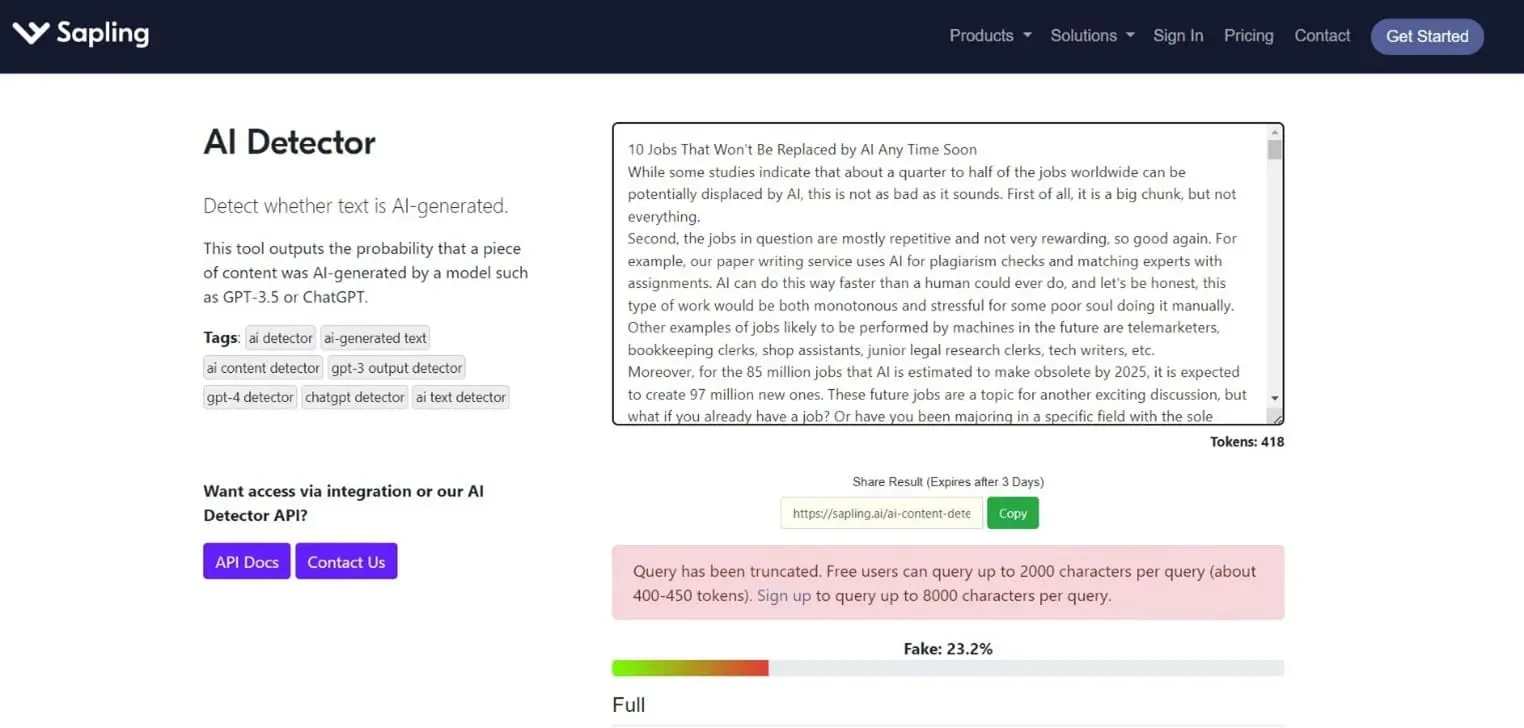

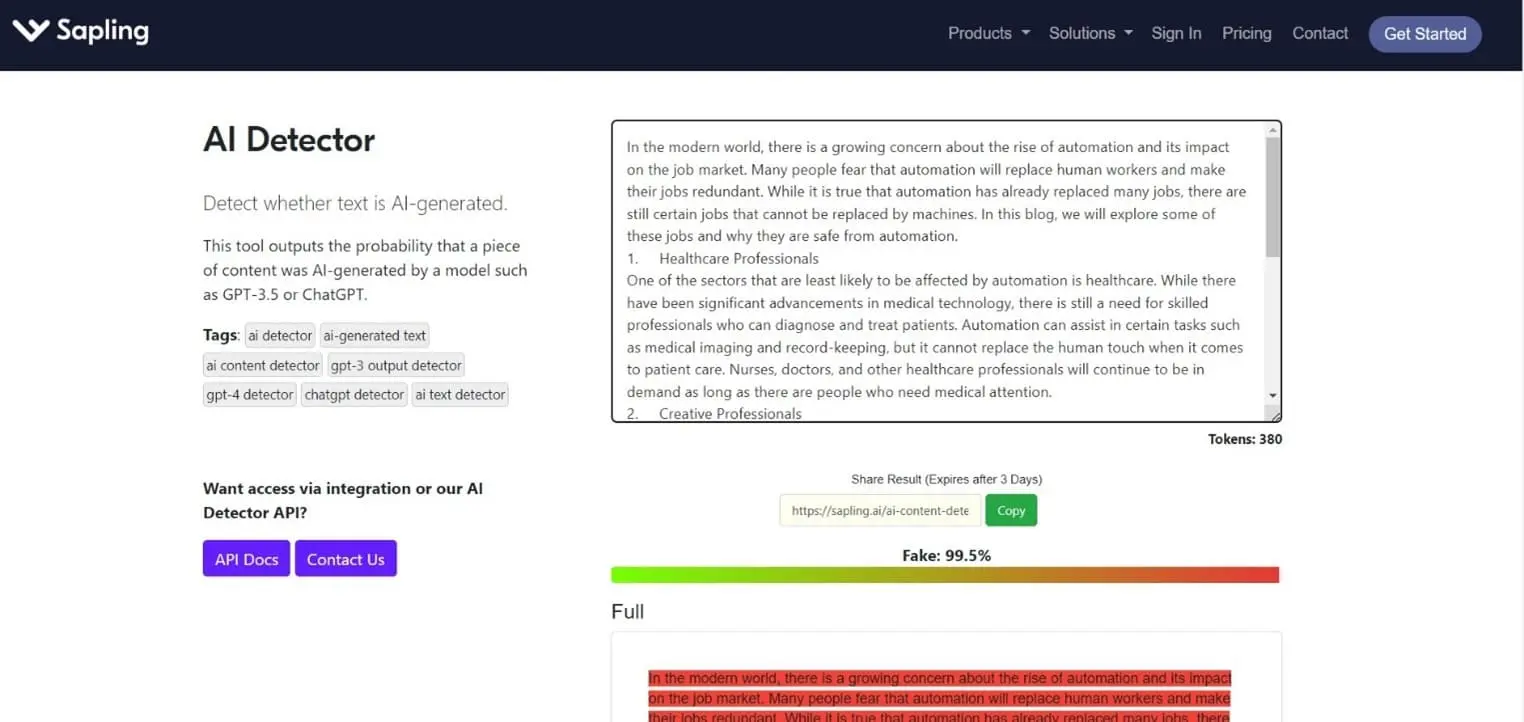

Free AI Content Detector Tool at Sapling

Results for human text:

Results for AI-generated text:

Sapling sets a high bar for texts and checks them rigorously. It estimates the percentage of “fake” content in the analyzed fragment and highlights the sentences it suspects most likely to be generated. Although it successfully detects AI-generated content, it also mislabels human-made output as partially “fake.” For example, according to Sapling’s detector, my fully manual blog about 10 jobs safe from automation is 23.2% fake. Maybe I should write in even longer sentences, idk. What do you think?

To check a piece of text, copy and paste it into the window. The free version allows you to analyze up to 2,000 characters per query.

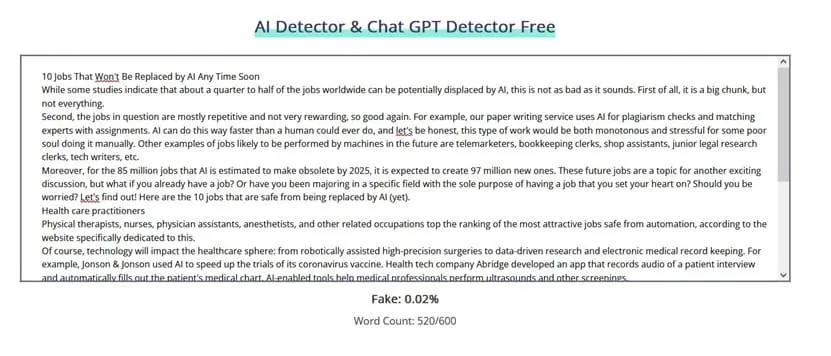

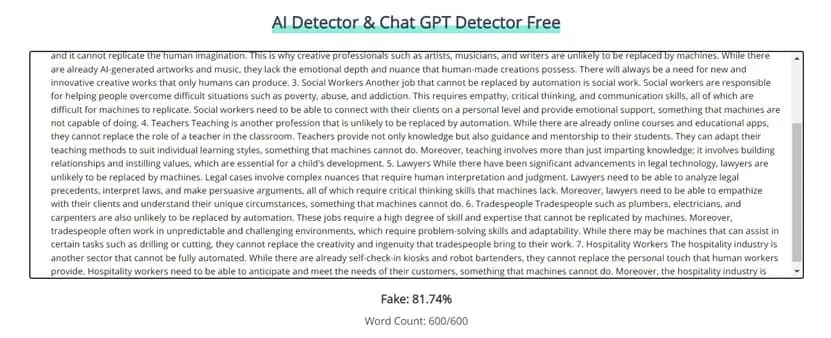

AI Content Detector at Corrector App

Results for human text:

Results for AI-generated text:

Although it doesn’t have specific metrics for perplexity or burstiness and doesn’t even highlight suspicious phrases, AI Detector at Corrector App is quite effective. It categorized my text as a mere 0.02% fake, even though I used the fragment from the top of the post, full of short sentences that other detectors were very suspicious about. At the same time, ChatGPT-generated text got an 81.74% fake rating. About 18% off, but still very impressive! Probably one of the most balanced tools on this list.

To check a piece of text, copy and paste it into the window. You can check up to 600 words per query for free.

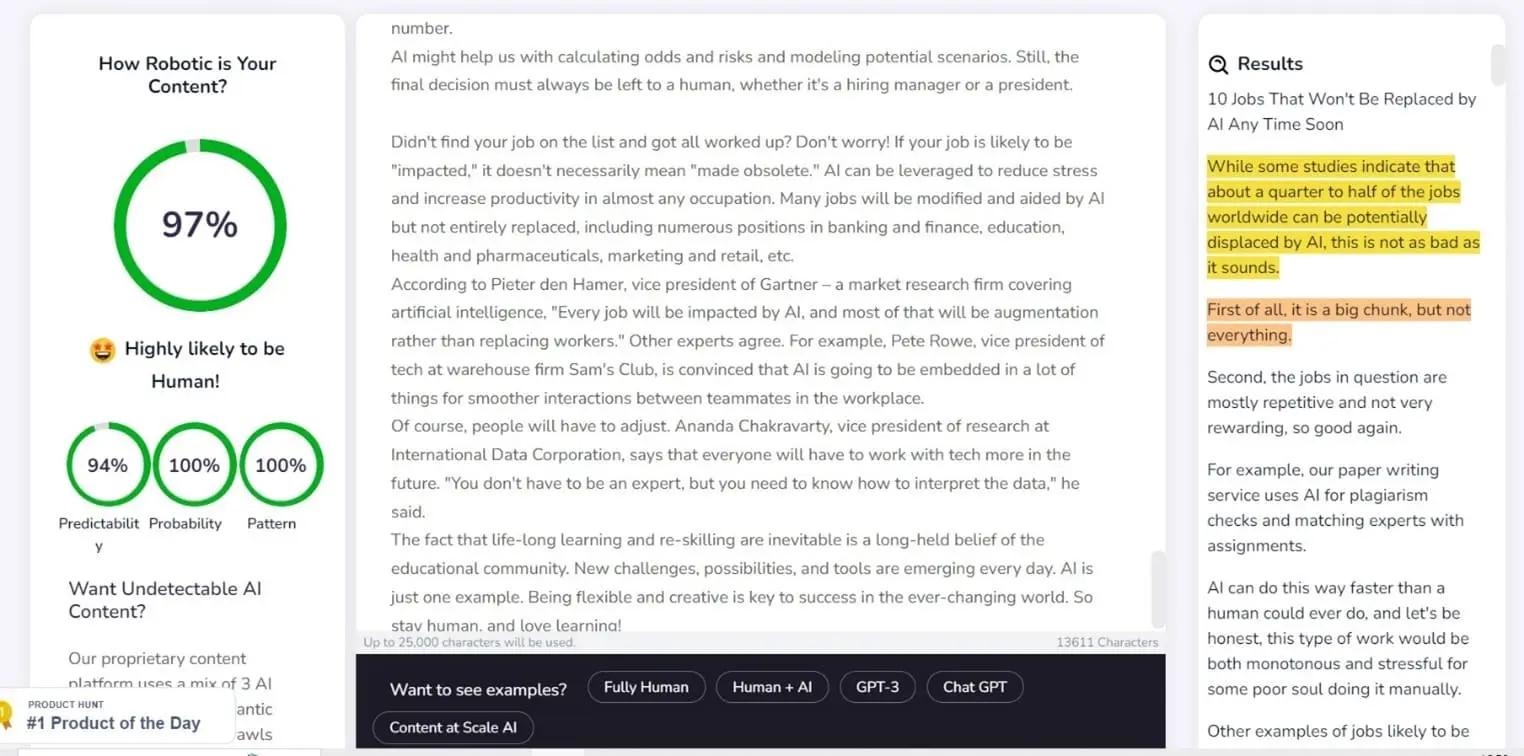

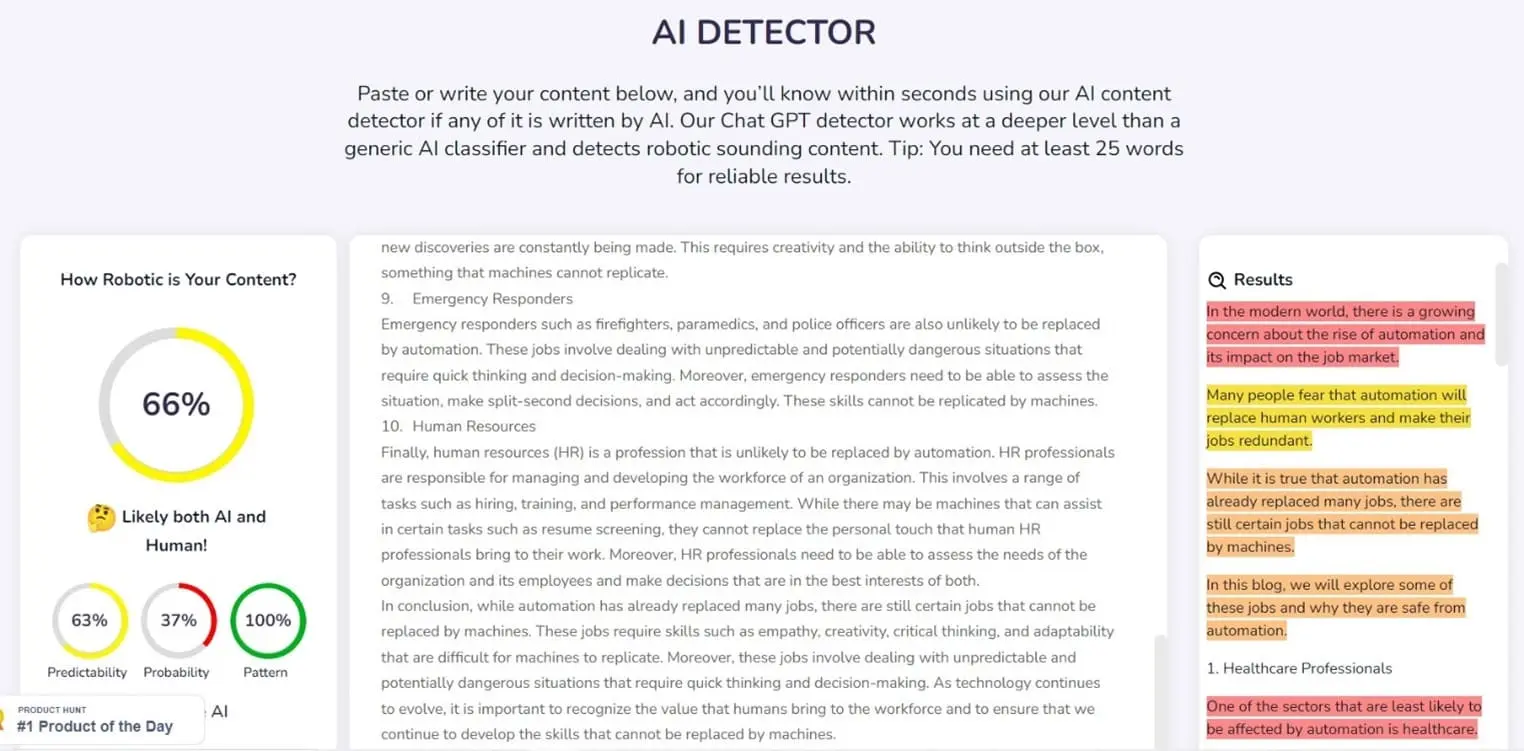

AI Detector by Content at Scale

Results for human text:

Results for AI-generated text:

The AI detector tool from Content at Scale is a bit too hesitant to attribute generated text to AI. While it gave my article a 97% probability of being human, it gave 66% to ChatGPT and assessed it as “likely both AI and human.” That said, it provides one of the most detailed analyses with different numbers for predictability, probability, pattern, highlighted phrases, total estimation in percentage, and a verbal verdict. So you can probably see for yourself if the text you analyze is iffy.

To check a piece of text, copy and paste it into the window. You can check up to 25,000 characters per query for free.

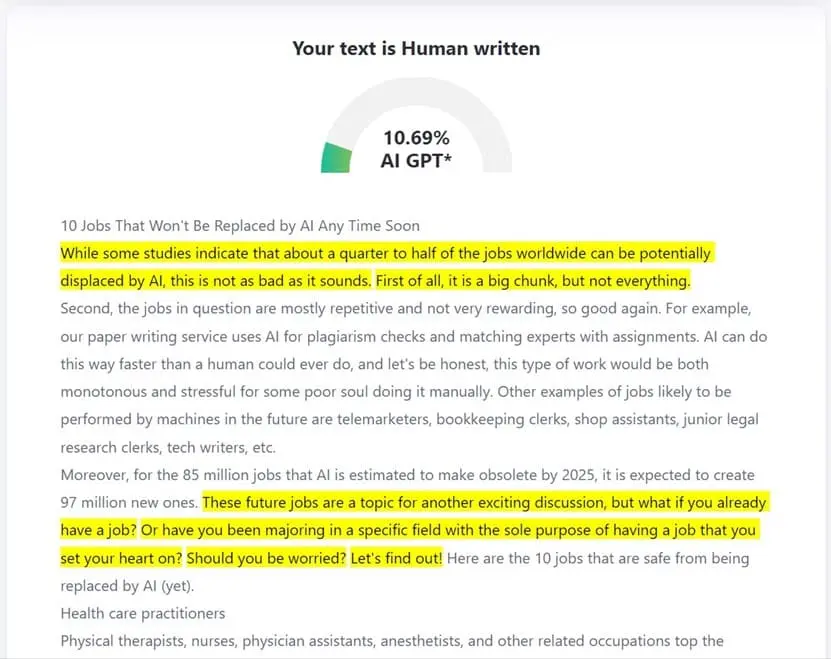

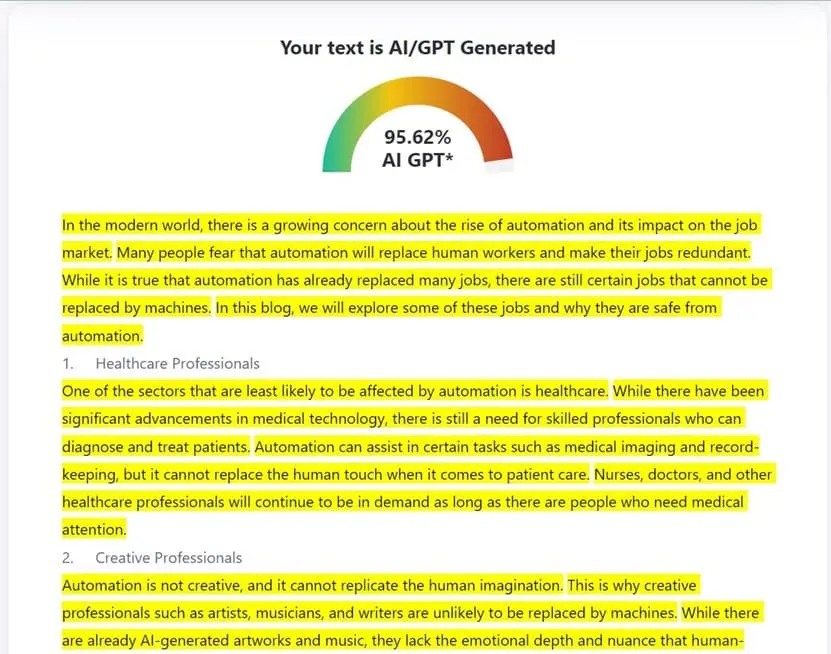

Results for human text:

Results for AI-generated text:

ZeroGPT shows similarly accurate results to Corrector App’s tool. The self-proclaimed accuracy is “above 98%.” It considered my text human, with 10.69% of phrases likely to be GPT-generated, while ChatGPT’s creation was given 95.62% and an “AI Generated” verdict. Not perfect, but good enough!

To check your text, you should paste it into the window. Currently, there is no cap on characters you can analyze per query.

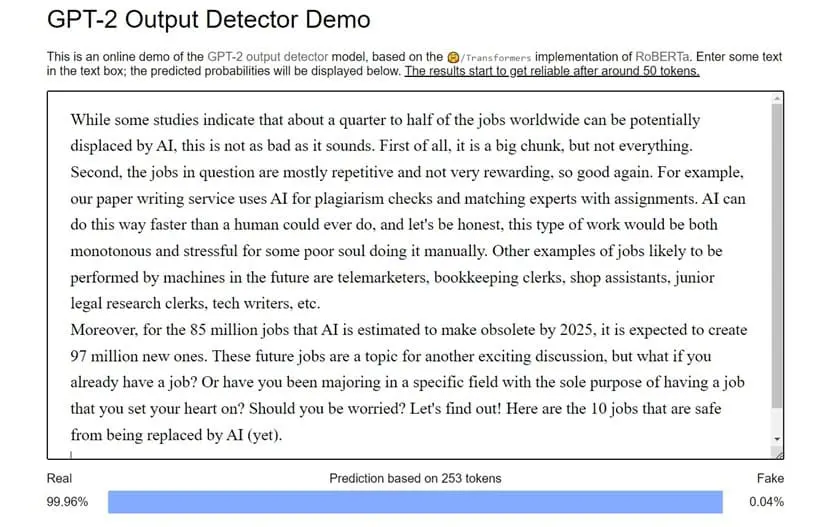

Results for human text:

Results for AI-generated text:

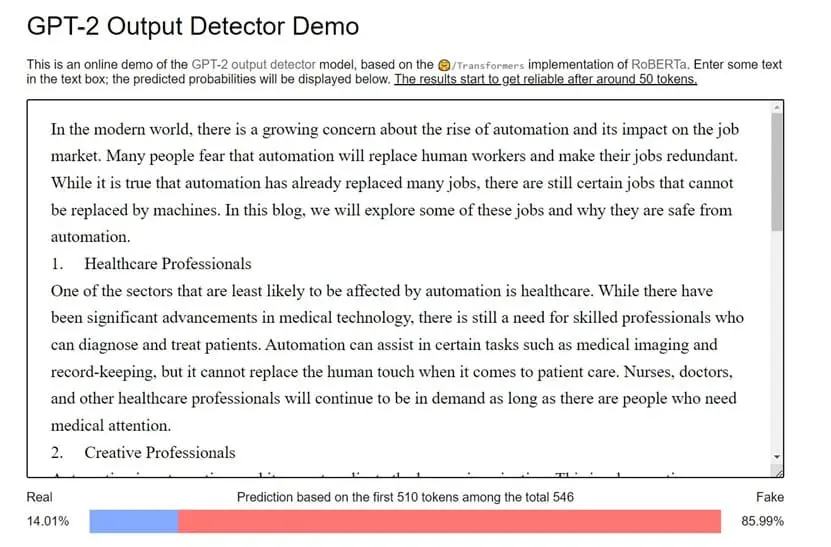

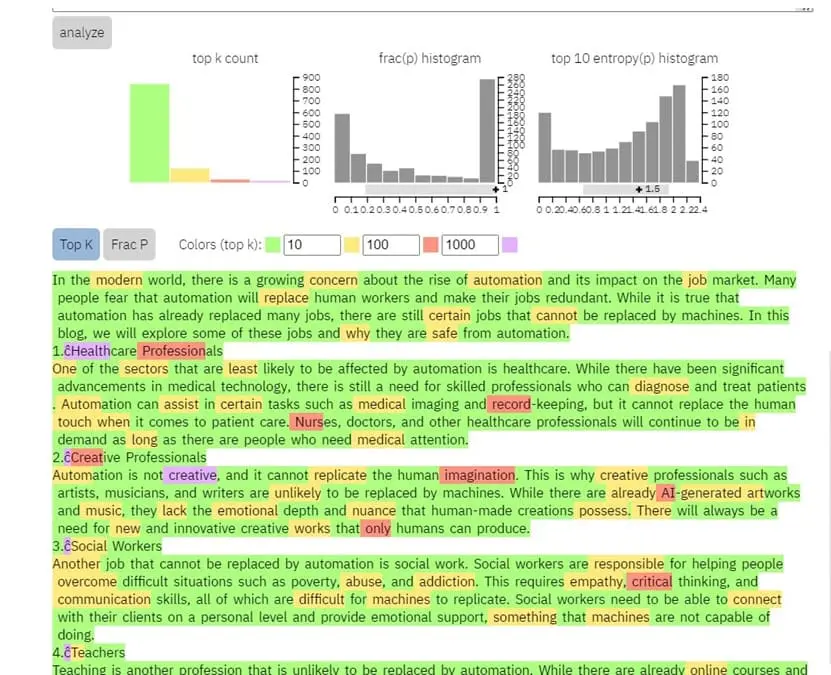

GPT-2 Output Detector Demo performs excellently even with GPT-3 output. It assessed ChatGPT’s text as 85.99% fake while giving my text a negligible 0.04% fake rating (again, for the first section of my article that most detectors tend to suspect of being generated the most). Not bad at all!

To analyze a text, you should paste it into the window. You can paste a fragment of any length, but if you submit a larger chunk, this tool might stop responding, thus processing the text only partially or refusing to return a result. It’s best to limit your queries to approximately 450 words – at least, as a free user.

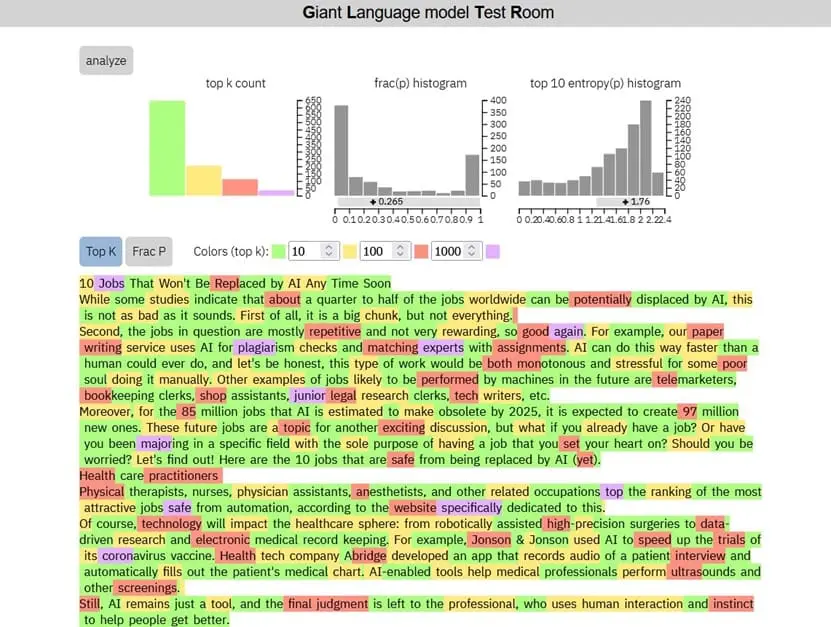

Giant Language Model Test Room (GLTR)

Results for human text:

Results for AI-generated text:

One of the most enigmatic tools on this list, GLTR looks like an app made for professionals or at least people who know the basics of data analysis. It was created by specialists from the MIT-IBM Watson AI Lab and Harvard NLP for forensic inspection of text to detect whether it’s real (human-written) or fake (AI-generated). It color-codes the analyzed text to show the most predictable words in green and the least predictable in violet (with yellow and red in between). It presents the results as three histograms of frequency distributions. You can compare these histograms to the results for sample texts, three machine samples and three human samples, to see what your fragment resembles the most. No clear-cut answers or percentages, unfortunately.
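The color-coding idea is easier to grasp with a small sketch. The Python below is an illustration under assumptions of my own: the bucket limits (top 10, top 100, top 1,000), the color names, and the helper names are hypothetical choices for this sketch, and a plain word-frequency ranking stands in for the per-word predictions a real language model would supply.

```python
from collections import Counter

# Assumed bucket limits: a word ranked within the limit gets that color.
BUCKETS = [(10, "green"), (100, "yellow"), (1000, "red")]


def rank_table(reference_tokens):
    """Rank words by frequency in a reference text (1 = most frequent)."""
    ordered = [word for word, _ in Counter(reference_tokens).most_common()]
    return {word: position + 1 for position, word in enumerate(ordered)}


def color_for_rank(rank):
    """Map a rank to a color; unseen or very rare words come out violet."""
    if rank is not None:
        for limit, color in BUCKETS:
            if rank <= limit:
                return color
    return "violet"


def color_tokens(tokens, ranks):
    """Pair every token with the color of its predictability bucket."""
    return [(token, color_for_rank(ranks.get(token))) for token in tokens]


def histogram(colored_tokens):
    """Count how many tokens landed in each color bucket."""
    return Counter(color for _, color in colored_tokens)


reference = ["the"] * 5 + ["cat"] * 3 + ["sat"]
colored = color_tokens(["the", "cat", "pondered"], rank_table(reference))
print(colored)
print(histogram(colored))
```

A text that comes out almost entirely green is one the model finds highly predictable, while a healthy sprinkling of red and violet suggests less predictable word choices.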

All I can say is that human text seems more evenly distributed and unpredictable than ChatGPT’s. My blog most resembled a human sample from a New York Times article, while ChatGPT’s looked like one of the successful “unicorn” models for machine text.

GLTR is definitely an excellent tool for professional and research purposes, but it might not be suitable for the average Joe or Jane. Still, it’s free and has no cap on characters, so you might want to learn how to use it.

Why AI-Assisted Writing is Problematic in Academia

AI text generation is used widely in marketing, SEO, journalism, and other areas. Google’s webmaster guidelines were updated and now clearly state that the search engine isn’t against automatically generated content as such but against spammy AI-generated content that has no value for users and is intended only to manipulate search rankings. AI-written or AI-assisted articles appear in blogs and major news outlets, including Forbes. So why is AI so reviled in academia, and why is everyone so worked up when students want a bite of this pie too?

The problem goes beyond the inertia of the educational system. You see, while an AI-generated merchandise description or weather forecast fulfills its informative function, an AI-generated student paper fails at its purpose.

The point of most academic papers is either to verify the student’s knowledge or to teach them research skills, i.e., critical thinking, working with sources, assessing the credibility of information, analysis, synthesis, etc. AI-generated papers undermine both goals.

If AI detectors fail to keep up with AI text generators, education will probably adopt a “flipped classroom” model, where students will be given topics to explore at home and then come to the classroom for examination and assessment only.

As for the research, I’m sure you are already familiar with multiple cases of AI inventing quotes, facts, and sources and citing falsehoods it found online in a credible, authoritative tone. If anything, AI made the need for human judgment and research skills even more pressing.

“Okay, but I just need to get through this course. It doesn’t have any bearing on my major or career-specific skills, only on my GPA!” I get it. Still, I wouldn’t recommend AI generation for such cases, even if you think it’s good and checks as “mostly human” by several detectors. There’s always a chance that your school might be using a more robust and nuanced subscription service trained specifically on academic texts. If you are pressed for time or facing an assignment that you see as busy work, better order a paper from a professional human writer.

How to Write So You Are Not Mistaken for an AI

Okay, how can you write to avoid suspicions of AI generation? All those misattributed fragments in a fully natural text might have alarmed you. You might be tempted to start writing in ridiculously long sentences and use a thesaurus to be less predictable, but it’s not the best solution.

Short sentences and frequently used words aren’t “bad,” of course, nor are they automatically an indication that the text is generated. They make your writing more readable and easy to parse – for humans and AI alike.

However, humans are still better at keeping track of long-winded passages and unexpected epithets. It might be a bit tiresome to read a sentence that takes up an entire paragraph, but you won’t confuse the subject and the predicate with the other nouns and verbs in it. AI probably will.

Editing tools like Grammarly hate long sentences with the burning passion of a thousand desert suns. They suggest, in a friendly tone, splitting longer sentences into smaller ones for better readability because “it can be hard for an average reader to understand.” Still, I always suspected the AI editors themselves simply could not make heads or tails of long sentences. Therefore, they ask you to split them in order to parse and check them.

If there is any advice I can give you to keep your writing more human, it’s to always use your own judgment when an AI editor suggests an “improvement.” Automated editors like Grammarly and Hemingway are good at spelling and grammar (to an extent), but don’t let them dictate your style.

Just be yourself and write in your own voice (unless you suspect you might be a rogue replicant on the run). If you write naturally, some percentage of your text might look suspicious to AI detectors. Yet you cannot write to please AI – your first worry should be your human readers!